4. CV-CS231N

Lecture 1:

Lecture 2: Image Classification Pipeline

Lecture 3: Loss Functions and Optimization

Lecture 4: Neural Networks and Backpropagation

Summary for today:

- (Fully-connected) Neural Networks are stacks of linear functions and nonlinear activation functions; they have much more representational power than linear classifiers

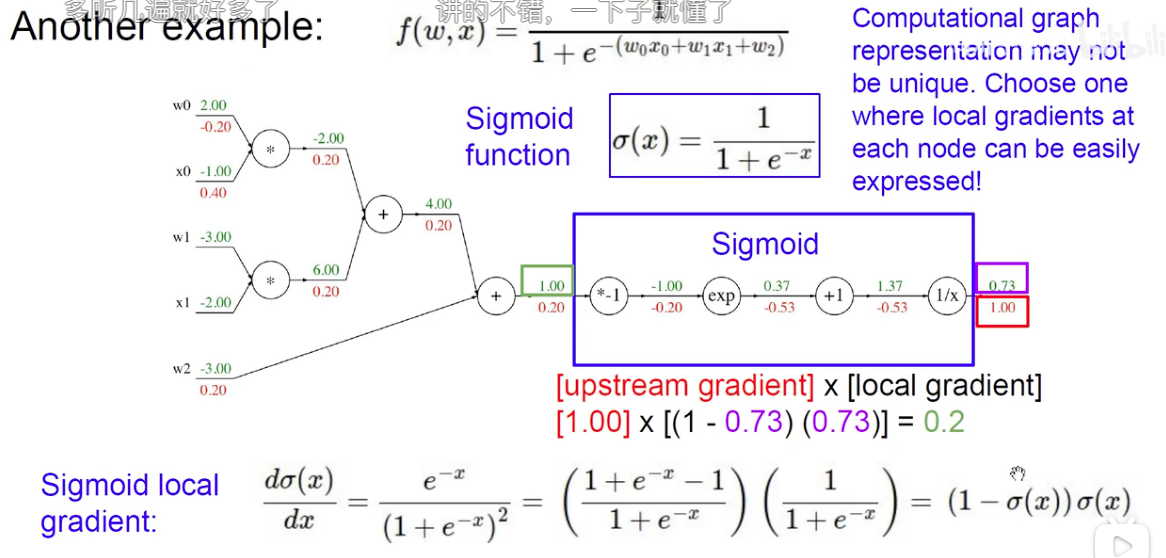
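To make the first point concrete, here is a minimal NumPy sketch of a two-layer fully-connected network, s = W2 max(0, W1 x + b1) + b2, assuming ReLU as the nonlinearity. The function name `two_layer_forward`, the random initialization, and the sizes (3072 inputs for a flattened 32×32×3 image, 100 hidden units, 10 classes) are illustrative choices, not taken from the lecture code.

```python
import numpy as np

def two_layer_forward(x, W1, b1, W2, b2):
    """Forward pass of a 2-layer fully-connected net (illustrative helper)."""
    h = np.maximum(0, W1 @ x + b1)   # hidden layer: linear map followed by ReLU
    s = W2 @ h + b2                  # output layer: raw class scores, no activation
    return s

rng = np.random.default_rng(0)
D, H, C = 3072, 100, 10              # input dim (32*32*3), hidden units, classes
x = rng.standard_normal(D)
W1 = 0.01 * rng.standard_normal((H, D))
b1 = np.zeros(H)
W2 = 0.01 * rng.standard_normal((C, H))
b2 = np.zeros(C)

scores = two_layer_forward(x, W1, b1, W2, b2)
print(scores.shape)  # (10,)
```

The ReLU between the two matrix multiplications is what lets this stack represent functions a single linear classifier cannot.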

- backpropagation = recursive application of the chain rule along a computational graph to compute the gradients of all inputs/parameters/intermediates

- implementations maintain a graph structure, where the nodes implement the forward() / backward() API

- forward: compute result of an operation and save any intermediates needed for gradient computation in memory

- backward: apply the chain rule to compute the gradient of the loss function with respect to the inputs
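The forward()/backward() API described above can be sketched in plain Python for scalar inputs. The class names `AddGate` and `MultiplyGate`, the graph f(x, y, z) = (x + y) · z, and the input values are hypothetical choices for illustration, not a specific library's API. Each gate caches what it needs during forward(), and backward() multiplies the upstream gradient by the gate's local gradient, which is the chain rule applied one node at a time.

```python
class AddGate:
    """out = x + y; the local gradient is 1 for both inputs."""
    def forward(self, x, y):
        return x + y

    def backward(self, dout):
        # Chain rule: dL/dx = dL/dout * dout/dx = dout * 1 (same for y)
        return dout, dout


class MultiplyGate:
    """out = x * y; inputs are cached because the local gradients depend on them."""
    def forward(self, x, y):
        self.x, self.y = x, y        # save intermediates needed by backward()
        return x * y

    def backward(self, dout):
        # dout/dx = y and dout/dy = x
        return dout * self.y, dout * self.x


# Graph for f(x, y, z) = (x + y) * z with sample inputs
x, y, z = -2.0, 5.0, -4.0
add, mul = AddGate(), MultiplyGate()

# Forward pass: evaluate nodes in topological order
q = add.forward(x, y)        # q = 3.0
f = mul.forward(q, z)        # f = -12.0

# Backward pass: reverse order, seeded with df/df = 1
dq, dz = mul.backward(1.0)   # df/dq = z = -4.0, df/dz = q = 3.0
dx, dy = add.backward(dq)    # df/dx = df/dy = df/dq = -4.0

print(f, dx, dy, dz)  # -12.0 -4.0 -4.0 3.0
```

Because each node only needs its own local gradient and the gradient flowing in from above, arbitrarily large graphs can be differentiated by composing small gates like these.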

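Nonlinear activation functions are what give a neural network its nonlinearity; without them, no matter how many layers of neurons you stack, the network is no different from a single linear layer.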
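A quick numerical check of this claim, as a sketch with arbitrary random matrices (the shapes are illustrative, and biases are omitted for brevity; with biases the composition W2(W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2) is still a single affine layer). Without an activation, two stacked layers equal one layer with weight W2 W1; with a ReLU in between, the map no longer satisfies g(-x) = -g(x), a property every linear map has.

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.standard_normal((50, 20))   # first layer: 20 -> 50
W2 = rng.standard_normal((10, 50))   # second layer: 50 -> 10
x = rng.standard_normal(20)

# Two stacked linear layers with no activation in between...
deep_linear = W2 @ (W1 @ x)
# ...compute the same function as one layer with weight W = W2 @ W1
W = W2 @ W1
print(np.allclose(deep_linear, W @ x))  # True

# Inserting a ReLU between the layers breaks linearity
def g_relu(v):
    return W2 @ np.maximum(0, W1 @ v)

print(np.allclose(g_relu(-x), -g_relu(x)))  # False
```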

Unless otherwise stated, all posts on this blog are licensed under CC BY-NC-SA 4.0. Please credit Ruiqy~! when reposting.
